Your team is already using AI.

Maybe it started with harmless things:

- Summarizing meeting notes

- Cleaning up emails

- Drafting proposals

Then someone pasted a client spreadsheet into ChatGPT.

Someone else uploaded a contract into Copilot.

An analyst dropped financial projections into Gemini.

Productivity shot up.

But here’s the question no one slows down to ask:

What actually happens to your business data once it enters an AI tool?

If you’re an accounting firm, legal practice, healthcare administrator, or PE-backed growth company, this isn’t theoretical. This is governance. This is compliance. This is cyber insurance renewal.

Let’s break it down clearly.

What Happens Technically When You Paste Data Into an AI Tool?

At a high level, four things typically happen:

- The data is transmitted to the AI provider’s cloud infrastructure.

- The system processes it to generate a response.

- The interaction may be logged for monitoring or service improvement.

- Depending on account type and settings, it may or may not be retained or used for model improvement.

Major vendors like OpenAI, Microsoft, and Google publish documentation explaining how enterprise and API data is handled. Those policies differ by plan and configuration.

The nuance matters.

Consumer accounts are not the same as enterprise agreements. Browser sessions are not the same as controlled API deployments. Opt-in and opt-out settings change retention behavior.

Here’s the uncomfortable part:

If your team is using AI through unmanaged browsers on unmanaged devices, you probably don’t know which rules apply.

And regulators won’t accept “we weren’t sure.”

Is My Business Data Being Stored or Used to Train AI Models?

The honest answer: it depends.

It depends on:

- Whether you are on a consumer or enterprise tier

- Whether training opt-outs are enabled

- Whether data is submitted through an API or a web interface

- What contractual terms you signed

- How long logs are retained

For compliance-driven professional firms, that level of ambiguity creates risk.
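One way to make that ambiguity visible is to record the factors above for each AI tool in use and flag whatever is still unknown. The sketch below is illustrative only: the `AIToolUsage` record, its field names, and the `unknown_factors` helper are hypothetical and do not reflect any vendor's actual settings or terms.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AIToolUsage:
    """Hypothetical inventory record; None means 'we do not know yet'."""
    tool: str
    enterprise_tier: Optional[bool] = None      # consumer vs. enterprise plan
    training_opt_out: Optional[bool] = None     # is the training opt-out enabled?
    via_api: Optional[bool] = None              # API vs. web interface
    contract_reviewed: Optional[bool] = None    # have the signed terms been reviewed?
    log_retention_days: Optional[int] = None    # how long logs are retained


def unknown_factors(usage: AIToolUsage) -> List[str]:
    """Return the names of the factors that nobody has answered yet."""
    return [
        name
        for name, value in vars(usage).items()
        if name != "tool" and value is None
    ]


if __name__ == "__main__":
    entry = AIToolUsage(tool="example-chat-tool", enterprise_tier=False)
    print(unknown_factors(entry))
```

Any tool whose list of unknowns is not empty is a tool where you cannot yet say which rules apply.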

If you handle:

- Tax returns

- Client financials

- Legal drafts

- Healthcare administrative data

- Personally identifiable information

Then AI data security is no longer a curiosity for your SMB. It becomes a board-level question.

Because the issue is not whether AI vendors are malicious.

The issue is accountability.

When something goes wrong, who owns the outcome?

Why Is AI Adoption a Bigger Compliance Issue Than It Looks?

AI expands your data surface area quietly.

Think about how most teams use it:

- Copy from accounting software

- Paste into AI

- Copy results

- Download to local machine

- Email externally

Now multiply that across 40 employees.

That is shadow data movement.

For firms facing audits or cyber insurance underwriting, this creates three immediate problems:

1. Evidence Gaps

Can you document:

- Where data traveled?

- Who accessed it?

- How long it was retained?
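Those three questions map naturally onto a structured log entry. As a minimal sketch, assuming you control the point where data is handed to an AI tool, each handoff could be recorded like this. The `AIUsageEvent` fields and the `record_event` helper are hypothetical names chosen for illustration, not part of any product.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class AIUsageEvent:
    """Hypothetical audit record for one handoff of data to an AI tool."""
    user: str                       # who accessed the data
    source_system: str              # where the data came from
    destination: str                # where the data traveled
    data_category: str              # e.g. "client financials"
    retention_days: Optional[int]   # how long it is retained, if known
    timestamp: str                  # when the handoff happened (UTC, ISO 8601)


def record_event(user: str, source_system: str, destination: str,
                 data_category: str, retention_days: Optional[int] = None) -> str:
    """Build one audit record and return it as a JSON line."""
    event = AIUsageEvent(
        user=user,
        source_system=source_system,
        destination=destination,
        data_category=data_category,
        retention_days=retention_days,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )
    return json.dumps(asdict(event), sort_keys=True)


if __name__ == "__main__":
    print(record_event("jdoe", "accounting software", "example-ai-tool",
                       "client financials", retention_days=30))
```

Appending lines like these to a central log is one simple way to have evidence on hand when an auditor or underwriter asks.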

2. Endpoint Risk

If AI access happens from personal laptops, home Wi-Fi, or unmanaged devices, your security perimeter dissolves.

A VPN does not solve this.

A VPN protects the tunnel. It does not control what happens after the data leaves it.

3. Vendor Escalation Confusion

If there’s a data exposure incident, who responds?

- Your MSP?

- The AI vendor?

- Your cloud provider?

- Your cyber insurer?

Vendor sprawl turns into escalation chaos fast.

How Does This Affect Cyber Insurance and Underwriting?

Underwriters increasingly ask about:

- Access control enforcement

- Logging and monitoring

- Data retention policies

- Backup and recovery discipline

- Endpoint management

If AI usage is decentralized and undocumented, you’ve introduced a variable into your risk profile.

For PE-backed growth companies and compliance-sensitive SMBs, unpredictable risk equals unpredictable cost.

Predictable IT infrastructure costs require predictable control.

Is DIY AI Adoption the Same as a Managed AI Environment?

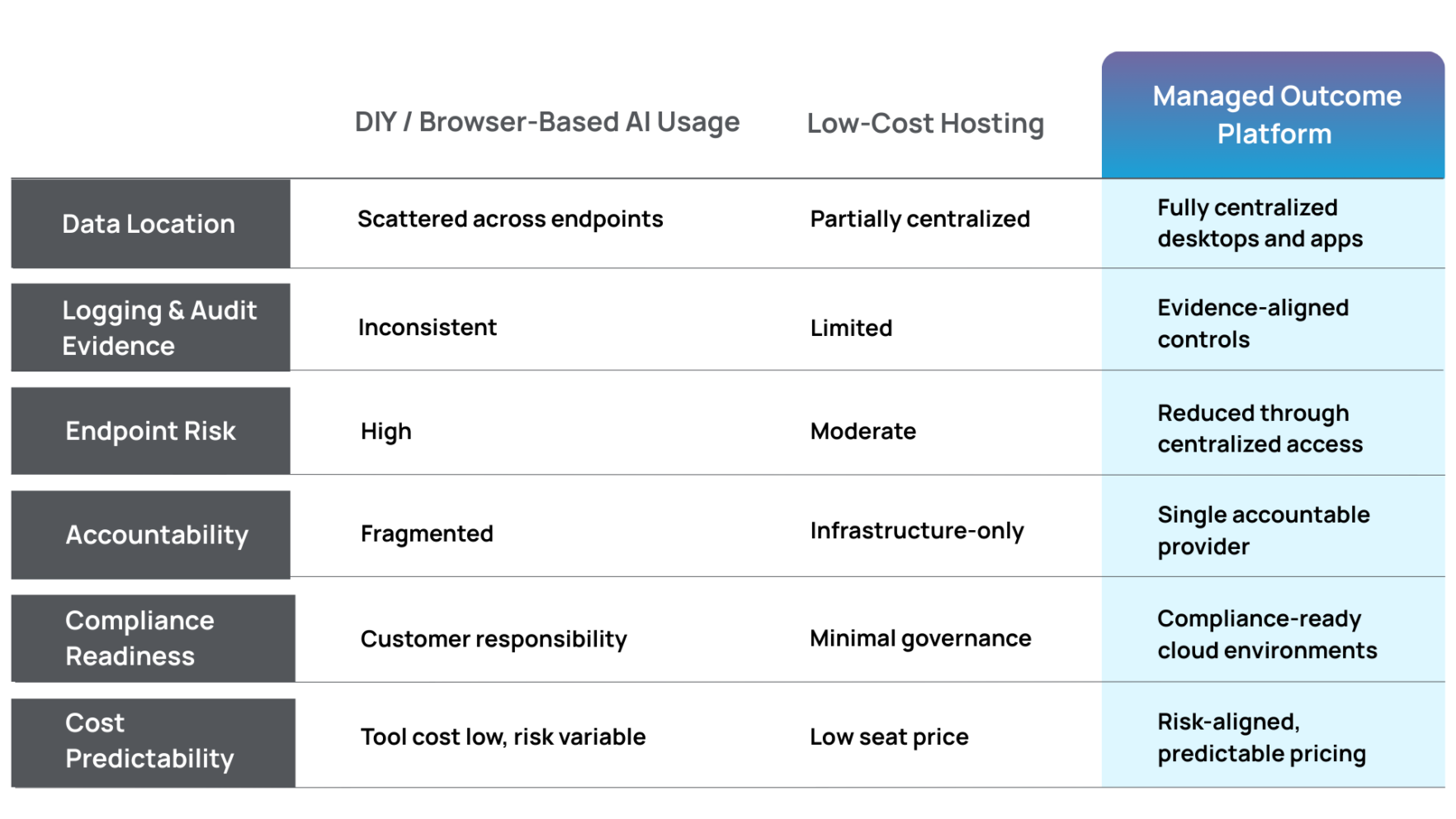

Let’s compare the models directly.

Here’s the pattern:

Tools are cheap. Ownership is expensive.

Managed cloud platforms built for SMBs convert hidden operational risk into defined accountability.

That difference only becomes obvious after something breaks.

Why Does Centralization Matter for AI Data Security?

If your AI usage runs inside a virtual desktop built for compliance, the rules change.

In a centralized, managed environment:

- Business data does not live on personal devices

- Role-based access is enforced

- Logging is centralized

- File transfer rules can be controlled

- Session activity is visible

- Backup and recovery are structured

This is how secure remote work without VPN sprawl actually works.

AI becomes a productivity layer inside a governed infrastructure, not an uncontrolled browser shortcut.

That distinction is everything.
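To make "role-based access is enforced" and "file transfer rules can be controlled" concrete, here is a minimal sketch of a default-deny policy check. The roles, action names, and the `ROLE_POLICY` table are assumptions made up for illustration; a real managed environment would enforce this in the platform itself, not in a script.

```python
# Hypothetical policy table: which roles may use AI tools and export files.
ROLE_POLICY = {
    "partner": {"use_ai": True, "export_files": True},
    "analyst": {"use_ai": True, "export_files": False},
    "contractor": {"use_ai": False, "export_files": False},
}


def is_allowed(role: str, action: str) -> bool:
    """Default deny: unknown roles and unknown actions are refused."""
    return ROLE_POLICY.get(role, {}).get(action, False)


if __name__ == "__main__":
    for role in ("partner", "analyst", "contractor", "intern"):
        print(role, is_allowed(role, "use_ai"), is_allowed(role, "export_files"))
```

The design choice worth noting is the default: anything not explicitly granted is denied, which is the opposite of how an unmanaged browser behaves.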

What About Legacy and Vertical Applications?

Many SMBs rely on:

- Tax software

- Case management platforms

- Industry-specific Windows applications

- Healthcare administrative systems

These applications were never designed with AI in mind.

So teams export data.

Exports become local files.

Local files become AI prompts.

AI outputs get redistributed.

In application-first hosting environments, data stays centralized. Exports can be restricted. Performance remains consistent. The blast radius shrinks.

If your business depends on one or two mission-critical applications, you cannot afford governance drift.

Are We Overreacting About AI Risk?

AI risk deserves serious attention, and the smartest approach is disciplined governance rather than emotional reaction.

AI adoption is inevitable. It is also manageable.

Treating AI like just another SaaS subscription oversimplifies its impact on data governance and infrastructure control.

It touches your most sensitive information.

If your firm cannot tolerate:

- Audit failure

- Revenue loss from downtime

- Insurance renewal friction

- Reputational damage

Then AI governance belongs inside your infrastructure strategy, not outside it.

What Should SMB Leaders Do Right Now?

Start with five blunt questions:

- Where does our business data physically reside during AI use?

- Are we using enterprise-tier agreements with clear data policies?

- Is AI access centralized or endpoint-driven?

- Can we generate audit evidence if asked tomorrow?

- Who owns the outcome if something breaks?

If those answers feel fuzzy, you have work to do.

The goal is to enable innovation while aligning AI adoption with compliance-ready cloud environments and accountable infrastructure.

Final Thought: AI Is Powerful. So Is Drift.

AI will absolutely improve productivity across accounting firms, legal practices, healthcare administration teams, and distributed SMB workforces.

But productivity without governance creates drift.

Drift creates exposure.

Exposure becomes cost.

The businesses that win over the next five years will be those that pair AI adoption with disciplined control.

- Centralized environments.

- Enforced security.

- Documented accountability.

- Predictable economics.

AI should accelerate your operations in a way that strengthens governance rather than introducing audit exposure.